On Artificial Intelligence

Out of curiosity, I recently looked into the scientific perspective on human-AI relationships. In my research, I found that people generally fall into two groups: those who are entirely for the use of AI, and those who are largely against it. I most likely fall into the second category.

It’s not that I see AI as a threat, as something that will replace human workers and make certain jobs obsolete; in fact, my worry is quite the opposite. What concerns me most about AI is our rapidly growing overreliance on it. Having worked in web development, and adjacent to IT/server management, for many years, I saw firsthand how quickly AI was adopted, especially by high-level corporate employees who thought it could replace the “dumb IT worker who sits around doing nothing all day”. Separately, I’ve heard stories of students using AI for schoolwork (at all levels, but especially in college). My own university has had to adopt an anti-AI policy just so it can deal with students who abuse the technology. And of course, reports from the tech companies behind the major AI products (one tends to think of OpenAI, Google, etc.) indicate that people are using AI for simple tasks, from deciding what to eat for dinner to writing an email to that coworker or boss they don’t like.

Certainly, the prevalence of AI art (and people’s apparent willingness to accept it, though I suspect much of that can be explained by the dead internet theory) is a net negative for the artistic community, and AI fails in critical areas because it is essentially a glorified predictive text model (it doesn’t know what it’s saying, or even really what words mean, or, for that matter, what words are). Still, what concerns me most is that we seem willing, even exuberant, to hand our lives over to artificial intelligence. At its core, every example above points to one thing that strikes fear into my heart about the future of our species (and should strike fear into yours too): we don’t want to think critically, and we may not even want to think at all.

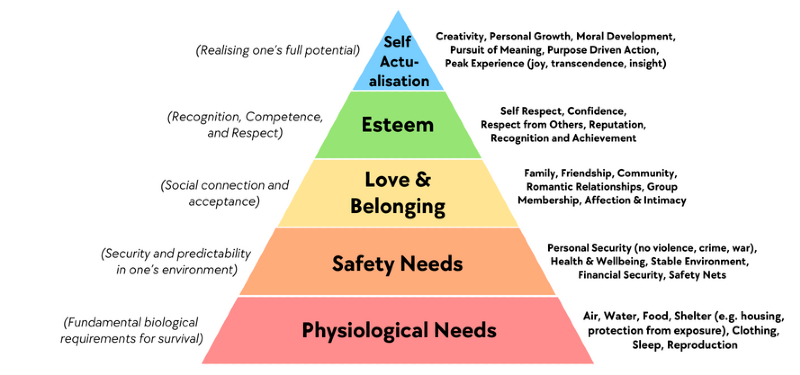

Obviously, everyone talks about the dream of not having to work and just being able to relax on a beach somewhere, but this goes beyond that. I’m not saying people aren’t entitled to breaks, or shouldn’t be able to do something they enjoy without the constant threat of financial insolvency hanging over their heads. What I am saying is that we should be concerned that people increasingly seem to want to give up on life, taking no interest in bettering themselves or, in the worst cases, in their surroundings at all. I understand it’s difficult to keep trying (hell, if anyone’s had low points, it’s me), but I can’t understand how we got to where we are today, where even the lowest tier of Maslow’s hierarchy of needs is a non-priority.

I also noticed something interesting the other day while watching a video about the use of AI for protein folding. Despite this being the “correct” use of AI (it solves a problem that’s difficult for humans, doesn’t harm any occupation, and is being used by people responsible enough to understand its implications), I still felt uneasy about it. What concerned me about this realization, though, was that if even this use of AI provoked such a strong reaction in me, there should surely be a much more active debate about AI in general (the way I see it, the issue is close in scope to climate change), and yet there doesn’t appear to be one.

After thinking on the subject for a bit, I came up with three possible explanations: (1) people see AI as an unimportant topic, or see other topics as substantially more significant; (2) people who support AI and people who oppose it don’t interact and don’t seek to; or (3) there is a concerted effort to suppress “dissent” about AI.

All three of these possibilities are highly concerning. The first points to the widespread existence of the very mindset I described earlier, where people take no interest in their own lives. The third (skipping the second for a moment) suggests that social media companies have a vested interest in promoting AI. This seems conspiratorial and unlikely at first, but becomes more plausible when you consider that nearly all popular social media apps have close ties to AI developers: Facebook and Instagram are both controlled by Meta, which is developing Meta AI; Google has a deal with Reddit regarding its data API, and is also YouTube’s parent company; Snapchat has its own (admittedly poor) AI chat feature; and Twitter has Elon Musk’s own Grok. What makes this particularly problematic is that many people rely exclusively on these apps for information, which may be tainted by poor AI training methods or data (the subtle racial prejudice exhibited by many AI programs comes to mind).

However, I think option 2 is the most problematic, since it is only possible if the two groups (people who support AI and people who oppose it) are made up primarily of different types of people. Now, I don’t want to make a judgement based purely on my own lived experience and what I’ve heard online (which, as I briefly mentioned earlier, poses problems of its own), but it seems that most people who oppose AI tend to be critical thinkers, close to tech, and/or in a job that has suffered the effects of AI. There are certainly many people in those categories who openly support AI, so it’s absolutely not fair to say something like “all smart people hate AI and all dumb people like it”, or whatever. But if we look at the first two groups (I’m setting aside the third, because no one wants to lose their job to obsolescence), I find it deeply disturbing that (a) critical thinkers and (b) people who understand how this crap works seem to think AI is a bad idea, and that, if option 2 holds, their concerns aren’t reaching the people who support it.

If I had to sum all this up, I’m not entirely sure what I’d say. “Be careful with potentially dangerous technology that has wide applications” applies, but it also applies to many entirely unrelated areas, where it amounts to a sort of fear-mongering. I also don’t want to come across as anti-technology; one of the most dangerous things we can do as humans is to stop the development of something useful because we see it as a minor threat (that is, not a threat to our species or society, but a threat to us personally, or to our way of life). So, I’ll leave you with this:

In the coming years, as AI grows in capability and use, keep asking yourself whether it is a net benefit or a net harm to the development of humanity. If it is a harm, I implore you to speak up for AI regulation (not abolition: doing away with AI altogether is as worth opposing as what I am describing), because if no one speaks up, by the time the world realizes what is happening, it may be too late.